A robot coach for the elderly

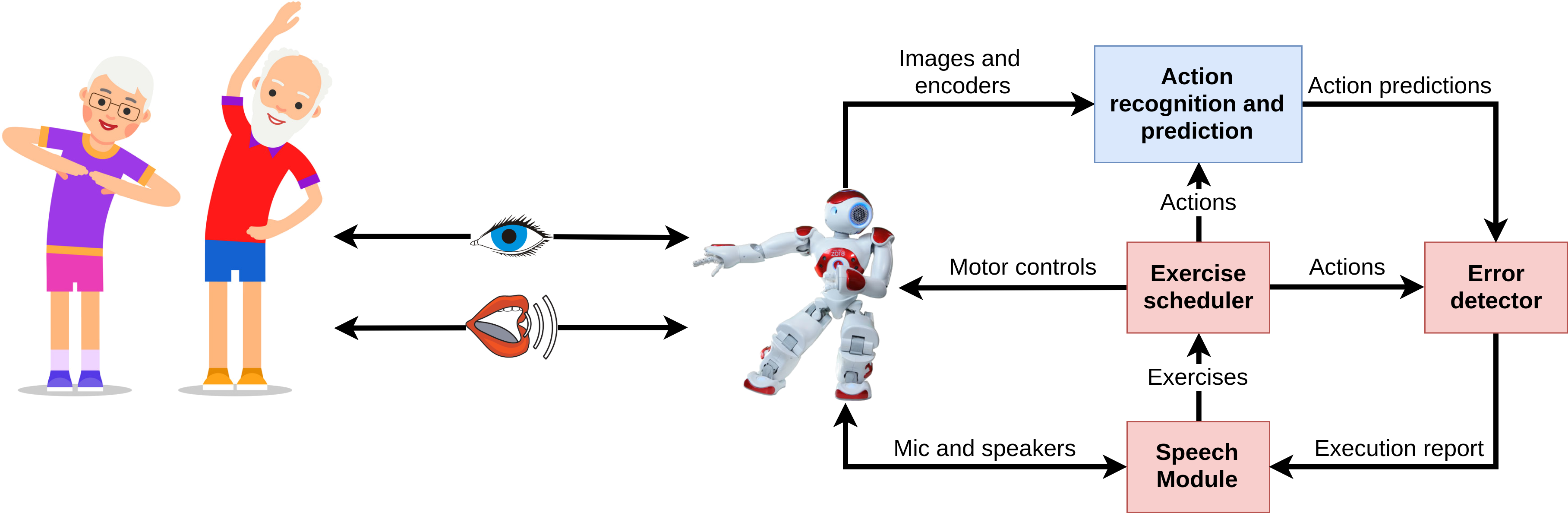

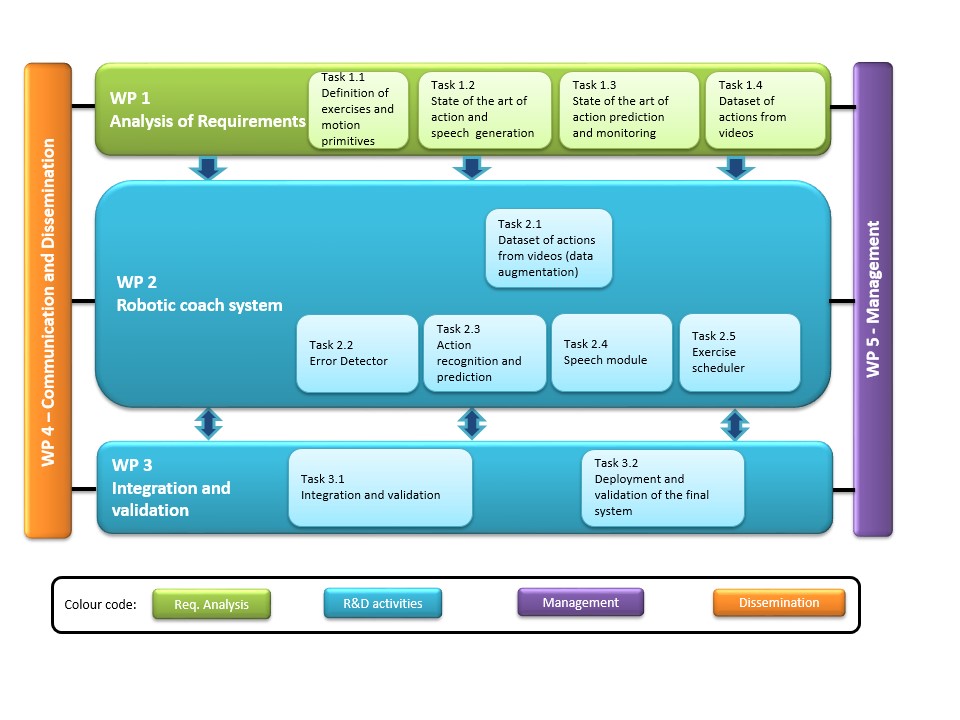

Physical activity helps older adults prevent numerous diseases and improves their quality of life. The EU-funded Dr VCoach project will design and deploy a robotic coach to assist elderly people during daily physical training. Specifically, the robot will understand verbal commands (e.g. "Today, I feel tired, can we do a lighter training?"), define and show the exercises to the user, monitor their performance and suggest improvements. The entire system will consist of a speech module, an exercise scheduler, an error detector, and an action recognition and prediction module. It will use RGB cameras and advanced deep learning systems implemented on a humanoid robot equipped with a middleware layer that makes it programmable by non-technical users.

Objectives

The main objective of this project (Dr VCoach) is the following: “Design and implement a robotic coach able to propose a proper exercise schedule based on human directives, monitor the exercise performed by the patient/elder and correct it in case of mistakes”. The output of the project will be a robotic coach that assists older adults during their daily physical training. The robot will be able to understand verbal commands from the user (e.g. "What is today's training schedule?" or "Today I feel tired, can we do a lighter training?"), define the sequence of exercises to be performed, show the exercises to the user (with a verbal description and by performing them itself), monitor the user performing the exercises using RGB cameras, detect any errors in the execution and suggest a correction for each mistake.

The whole system can be divided into four modules:

- Speech module: This module is in charge of the vocal interaction between the robot and the user.

- Exercise scheduler: The role of this module is to break the selected exercise into atomic actions, and send these actions to the Error detector and Action recognition and prediction modules.

- Error detector: The Error detector module analyses the results coming from the Action recognition and prediction module against the required actions received from the Exercise scheduler. After evaluating the performed action, the module sends an evaluation report to the Speech module, which informs the user.

- Action recognition and prediction: This module has the dual task of recognising the action performed by the user from the RGB video captured by the robot's embedded camera, and predicting the future visuo-proprioceptive stimuli based on the action that is being performed.
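The data flow between the four modules can be illustrated with a minimal Python sketch. It is not the project's implementation: the exercise names, the `EXERCISES` table, and the functions `schedule`, `detect_errors` and `verbalise` are all hypothetical, and the Action recognition and prediction module is replaced by a plain list of action labels standing in for the output of a deep learning model.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical exercise library: exercise name -> ordered atomic actions.
EXERCISES = {
    "arm_raise": ["raise_left_arm", "lower_left_arm",
                  "raise_right_arm", "lower_right_arm"],
    "squat": ["bend_knees", "stand_up"],
}


def schedule(exercise: str) -> List[str]:
    """Exercise scheduler: break the selected exercise into atomic actions."""
    return list(EXERCISES[exercise])


@dataclass
class Evaluation:
    """One line of the evaluation report sent to the speech module."""
    step: int
    required: str
    observed: Optional[str]  # None if no action was recognised for this step

    @property
    def correct(self) -> bool:
        return self.required == self.observed


def detect_errors(required: List[str], observed: List[str]) -> List[Evaluation]:
    """Error detector: compare required and recognised actions step by step."""
    report = []
    for i, req in enumerate(required):
        obs = observed[i] if i < len(observed) else None
        report.append(Evaluation(step=i + 1, required=req, observed=obs))
    return report


def verbalise(report: List[Evaluation]) -> str:
    """Speech module: turn the evaluation report into a message for the user."""
    mistakes = [e for e in report if not e.correct]
    if not mistakes:
        return "Well done, every step was correct."
    parts = [
        f"step {e.step}: expected {e.required}, saw {e.observed or 'nothing'}"
        for e in mistakes
    ]
    return "Let's fix this: " + "; ".join(parts) + "."


if __name__ == "__main__":
    required = schedule("squat")
    # Stand-in for the labels a recognition model would produce from RGB video.
    observed = ["bend_knees", "raise_left_arm"]
    print(verbalise(detect_errors(required, observed)))
```

Keeping the scheduler, the error detector and the speech output as separate functions mirrors the module boundaries described above, so a real recogniser could later replace the hard-coded `observed` list without touching the other parts.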

The system will be implemented on Zora (a NAO robot with a software layer that makes it usable by non-ICT users).

Team

Nino Cauli

Principal Investigator

Diego Reforgiato Recupero

Coordinator